Use A/B testing in your campaigns to learn what your customers like.

Randomness

Using the “A/B test audience split” filter in Segmentation (values 1-100) always selects the same audience for a given range, whether in a Flow campaign, a One-off campaign or anywhere else.

Based on each customer’s ID, Samba’s algorithm assigns every customer a random but permanent number from 1 to 100.

- This guarantees that the same random part of the audience is selected across different campaigns.
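To illustrate why a fixed per-customer number always selects the same audience, here is a minimal sketch. Samba does not publish its algorithm, so the hash-based `ab_split_value` function below is a hypothetical stand-in that only mimics the described behavior: a value from 1 to 100 derived from the customer ID that never changes.

```python
import hashlib


def ab_split_value(customer_id: str) -> int:
    """Map a customer ID to a fixed number from 1 to 100.

    Hypothetical stand-in for Samba's unpublished algorithm: hashing the ID
    means the same customer always lands on the same number.
    """
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    # Read the first 8 bytes as an unsigned integer and fold it into 1..100.
    return int.from_bytes(digest[:8], "big") % 100 + 1


def in_range(customer_id: str, low: int, high: int) -> bool:
    """Emulate the filter: True if the customer's value lies in [low, high]."""
    return low <= ab_split_value(customer_id) <= high


# The same ID yields the same value every time, in every campaign.
print(ab_split_value("customer-42") == ab_split_value("customer-42"))  # True
```

Because the value depends only on the ID, a filter such as 1-50 picks exactly the same customers in a Flow campaign, a One-off campaign or anywhere else.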

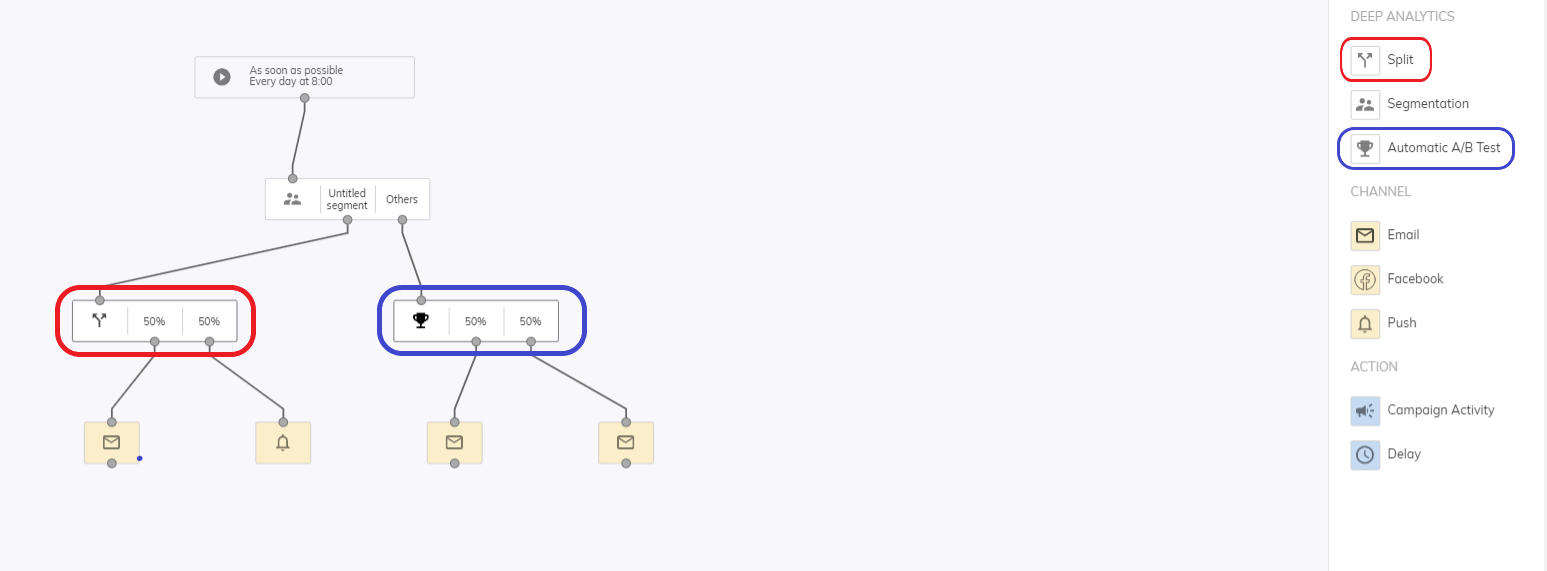

Split and Automatic A/B test nodes in a Flow campaign, by contrast, always select their audience randomly!

- For example, placing two 50%/50% Split nodes one below the other makes sense, because the second Split again randomly selects 50% of the customers coming from the first one.

- These splits are therefore not based solely on the “A/B test audience split” value; every node in the Flow campaign adds its own element of randomness.

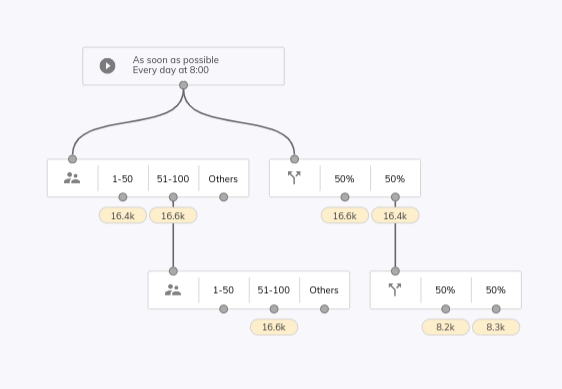

The difference between using a Split or Automatic A/B test node and using a Segmentation node with the “A/B test audience split” filter is illustrated in the figure above.

- The left branch uses Segmentation nodes with the “A/B test audience split” filter. In the first node, the audience is split 50%/50% as expected. However, when a second Segmentation node with an identical definition is connected below it, its “1-50” output is empty, because each customer’s value is fixed and every customer reaching that node already has a value between 51 and 100. As a result, the second node outputs 0 customers for “1-50” and exactly as many customers for “51-100” as the “51-100” output of the node above it.

- The right branch uses a Split node followed by a second Split node with the same definition. Because each instance of the Split node applies its own randomness, the downstream node again divides its customers evenly, which effectively splits the 50% half of the database in half again.
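The two branches can be simulated in a few lines. This is a sketch under stated assumptions: `ab_split_value` is a hypothetical hash-based stand-in for Samba’s fixed 1-100 value, and `split_node` models a Split node as an independent 50/50 coin flip per customer, which is one plausible reading of “randomness dependent on each instance”, not Samba’s actual implementation.

```python
import hashlib
import random


def ab_split_value(customer_id: str) -> int:
    # Hypothetical stand-in: a fixed value from 1 to 100 per customer.
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100 + 1


def segmentation_node(customers, low, high):
    # Filters on the static value, so identical definitions give identical results.
    return [c for c in customers if low <= ab_split_value(c) <= high]


def split_node(customers, rng):
    # Each Split node draws fresh random numbers, independent of other nodes.
    first, second = [], []
    for c in customers:
        (first if rng.random() < 0.5 else second).append(c)
    return first, second


customers = [f"customer-{i}" for i in range(10_000)]

# Left branch: Segmentation "51-100", then a node with the same definition.
upper = segmentation_node(customers, 51, 100)
print(len(segmentation_node(upper, 1, 50)))                  # 0
print(len(segmentation_node(upper, 51, 100)) == len(upper))  # True

# Right branch: Split 50/50, then another Split 50/50 on one half.
rng = random.Random(0)
half, _ = split_node(customers, rng)
quarter_a, quarter_b = split_node(half, rng)
print(len(half), len(quarter_a), len(quarter_b))  # roughly 5000, 2500, 2500
```

The static filter cannot subdivide a group it has already selected, while the independent draws in each Split node can.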

A/B test example with two variants

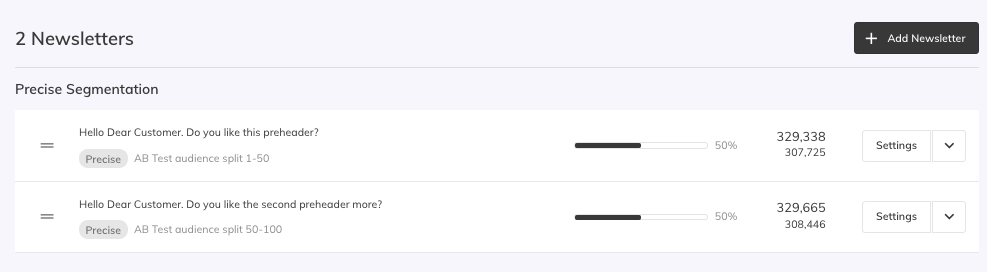

Let’s test two different preheaders and, based on the results, decide which one performs better. All other email settings stay the same, so the preheader is the only difference between the two emails. To achieve this, we will send the emails to two separate customer segments so that no customer receives both.

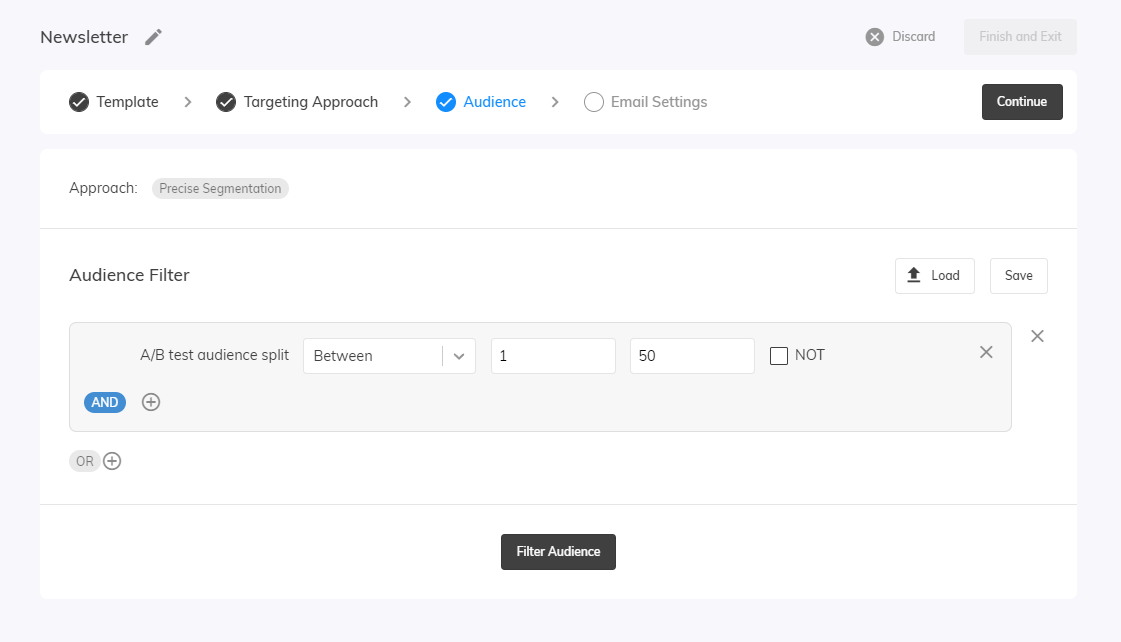

Create the campaign with the first newsletter: add a template, select precise segmentation, and under Audience choose the Audience Filter “A/B test audience split” with a range of 1 to 50. In the last step, enter the first preheader you want to test.

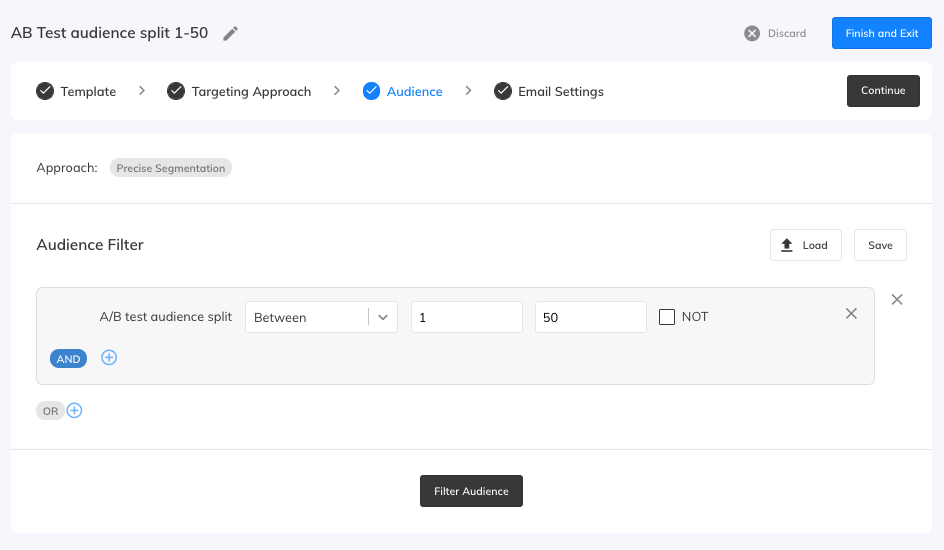

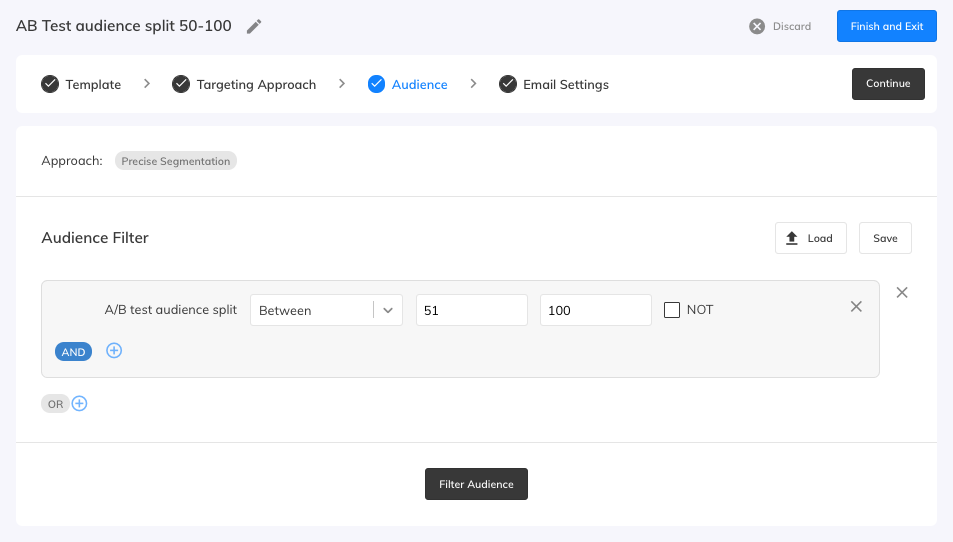

Add the second newsletter, and this time under Audience set the “A/B test audience split” to a range of 51 to 100. In the last step, fill in the Email settings with the second preheader text.

This splits the customers into two groups, so no one receives both emails and you can measure the effectiveness of one preheader against the other.
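As a quick check of why the ranges 1-50 and 51-100 work, the sketch below builds both audiences and confirms they do not overlap and together cover everyone. It again assumes a hypothetical hash-based `ab_split_value` in place of Samba’s own algorithm, and uses made-up customer IDs.

```python
import hashlib


def ab_split_value(customer_id: str) -> int:
    # Hypothetical stand-in for Samba's fixed 1-100 value per customer.
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100 + 1


customers = [f"customer-{i}" for i in range(10_000)]

# Newsletter A (first preheader) targets 1-50, newsletter B targets 51-100.
group_a = {c for c in customers if 1 <= ab_split_value(c) <= 50}
group_b = {c for c in customers if 51 <= ab_split_value(c) <= 100}

print(group_a.isdisjoint(group_b))               # True: nobody gets both emails
print(len(group_a | group_b) == len(customers))  # True: everyone gets one email
print(len(group_a), len(group_b))                # two almost equal counts
```

Since every customer has exactly one value between 1 and 100, the two ranges partition the audience, and with a uniform assignment each half holds close to 50% of it.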

As you can see, the audience was split into two almost equal parts.

After the campaign has run, evaluate the results to see which preheader performed better. You can then use that information to move forward, either by creating another One-off campaign or by using a Flow campaign to work further with the campaign activity results, for example with the Campaign activity node, as described in this article about Flow campaigns.
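When comparing the two variants, a small difference in open rate (the metric a preheader most directly influences) may be just noise. One common way to check is a pooled two-proportion z-test, sketched below; this is a general statistical technique, not a Samba feature, and `compare_open_rates` is a hypothetical helper you would feed with the opens and sends reported for each email.

```python
import math


def compare_open_rates(opens_a, sent_a, opens_b, sent_b):
    """Return (rate_a, rate_b, z) using a pooled two-proportion z-test."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate under the assumption that both variants perform equally.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    # Guard against zero variance (e.g. nobody or everybody opened).
    z = (rate_a - rate_b) / se if se > 0 else 0.0
    return rate_a, rate_b, z
```

As a rule of thumb, |z| above about 1.96 suggests the difference is significant at the 5% level (two-sided); a positive z favors variant A and a negative z favors variant B.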